The Brane SDK provides comprehensive support for Kalray’s MPPA (Massively Parallel Processor Array) Coolidge processor, enabling developers to harness its unique architecture for high-performance, deterministic computing. This guide will walk you through creating a vector dot product application targeting both standard x86_64 processors and the Kalray MPPA Coolidge accelerator.

Project Structure for Kalray Applications #

A Coolidge-targeted project in the Brane SDK follows this structure:

dotProduct/

├── build.gradle # Build configuration

├── README.md # Project documentation

└── src/

└── main/

├── cpp/

│ └── oclDotProduct.cpp # Host

├── headers/

│ └── host_tps.h # Tracepoint

└── opencl/

└── DotProduct.cl # OpenCL kernel

This organization follows Brane SDK conventions, with source code organized by language and purpose.

Note: If you’ve completed previous tutorials in this guide, you can apply the same approach to create this project structure. The key difference is using the Coolidge-specific plugin in the

build.gradlefile.

Setting Up Your Kalray MPPA Project #

Let’s examine the components of our dot product application targeting the Kalray MPPA Coolidge processor:

Configure the Build File #

The build.gradle file for Kalray MPPA development has some specific configurations:

plugins {

id 'com.brane.coolidge.cpp' // Plugin for Coolidge MPPA accelerator

//id 'com.brane.cpu.cpp-application' // Uncomment to compile for x86_64 or GPU accelerator

}

application {

targetMachines = [

machines.linux.x86_64, // Standard x86_64 Linux host

coolidgeMachines.clusterOS.architecture("MPPA_v2") // Kalray MPPA Coolidge accelerator

]

// Configure linker settings for all binaries

binaries.configureEach {

def linkTask = linkTask.get()

if (toolChain instanceof GccCompatibleToolChain) {

linkTask.linkerArgs.addAll(['-ldl', '-llttng-ust']) // Link against dynamic linking and tracing libraries

}

}

}This configuration:

- Applies the Brane Coolidge plugin, which sets up the Kalray MPPA toolchain

- Targets both standard x86_64 Linux and Kalray MPPA Coolidge

- Configures linker settings, including libraries for dynamic linking and LTTng tracing

The coolidgeMachines.clusterOS.architecture("MPPA_v2") target specifies that we’re building for the Coolidge MPPA v2 processor running the ClusterOS operating system.

Tracepoint Header for Debugging #

The host_tps.h header defines tracepoints for debugging and performance analysis:

#undef MPPA_TRACEPOINT_PROVIDER

#define MPPA_TRACEPOINT_PROVIDER host

#undef MPPA_TRACEPOINT_FILE

#define MPPA_TRACEPOINT_FILE host_tps.h

#if !defined(_HOST_TPS_H_) || defined(MPPA_TRACEPOINT_HEADER_MULTI_READ)

#define _HOST_TPS_H_

#include "mppa_trace.h"

MPPA_DECLARE_TRACEPOINT(host, main_ENTER, (MPPA_TRACEPOINT_DEC_FIELD(int, val)))

MPPA_DECLARE_TRACEPOINT(host, main_EXIT, (MPPA_TRACEPOINT_DEC_FIELD(int, val)))

MPPA_TRACEPOINT_LOGLEVEL(host, main_ENTER, MPPA_TRACE_ERR)

MPPA_TRACEPOINT_LOGLEVEL(host, main_EXIT, MPPA_TRACE_ERR)

#endifTracepoints are a powerful tool for debugging and performance analysis on the Kalray MPPA platform. They allow you to:

- Insert lightweight logging at key points in your code

- Track program execution flow across clusters

- Measure the timing of specific operations

- Debug complex parallel execution patterns

OpenCL Kernel for Dot Product #

The DotProduct.cl file contains our OpenCL kernel implementation:

/*

* DotProduct Kernel: Computes the dot product of two vectors 'a' and 'b', storing the result in 'c'.

* Each work item computes a partial dot product for a segment of the input vectors.

* Copyright (C) 2019 Kalray SA. All rights reserved.

*/

// Define work group size if ENABLE_WG_SIZE is specified

#ifdef ENABLE_WG_SIZE

__attribute__((reqd_work_group_size(16, 1, 1)))

#endif

// Kernel function declaration

__kernel void DotProduct (__global float* a, __global float* b, __global float* c, int iNumElements)

{

// Get the global ID of the work item, which determines the data it will process

int iGID = get_global_id(0);

// Perform a boundary check to ensure the work item operates within the vector length

if (iGID >= iNumElements)

{

return; // Exit if the work item's ID is outside the bounds of the input arrays

}

// Calculate the index offset for the work item based on its ID

// Each work item handles a segment of four elements for vectorization

int iInOffset = iGID << 2;

// Perform the dot product operation for a segment of four elements and store the result

// The calculation involves element-wise multiplication of corresponding vector elements

// followed by addition of these products.

c[iGID] = a[iInOffset] * b[iInOffset] + // Multiply and add the first pair of elements

a[iInOffset + 1] * b[iInOffset + 1] + // Multiply and add the second pair

a[iInOffset + 2] * b[iInOffset + 2] + // Multiply and add the third pair

a[iInOffset + 3] * b[iInOffset + 3]; // Multiply and add the fourth pair

}Key aspects of this kernel:

- Each work item processes four consecutive elements (for vectorization)

- The kernel includes boundary checks to handle edge cases

- It applies the dot product formula: multiply corresponding elements and sum the results

- The kernel can be configured with a specific work group size when

ENABLE_WG_SIZEis defined

Host Application Code #

The oclDotProduct.cpp file contains the host application that initializes the OpenCL environment, sets up the data, executes the kernel, and verifies the results:

/*

* Copyright (C) 2025 Brane Technologies LLC - All Rights Reserved

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*

* Author: Brane Technologies

* Contact: nicolas@branetechnologies.com

* Description:

* This program performs vectorized dot product computation using OpenCL.

* It selects the most powerful OpenCL device, compiles the kernel, and

* runs it while verifying the computed results.

*/

#include <iostream>

#include <vector>

#include <fstream>

#include <sstream>

#include "opencl.hpp" // Include OpenCL-Wrapper

// Define number of elements

const int numElements = 16 * 16 + 13;

// Function to read a file's content into a string

std::string read_file(const std::string& filename) {

std::ifstream file(filename);

if (!file.is_open()) {

throw std::runtime_error("Failed to open kernel file: " + filename);

}

std::ostringstream content;

content << file.rdbuf();

return content.str();

}

// Main function

int main() {

try {

// List all available OpenCL devices

std::cout << "Listing all available OpenCL devices:" << std::endl;

std::vector<Device_Info> devices = get_devices();

// Display information for each device

for (const auto& device : devices) {

print_device_info(device);

}

// Select the device with the most FLOPS

Device_Info selected_device = select_device_with_most_flops(devices);

// Print the selected device information

std::cout << "\nSelected device based on the highest FLOPS:" << std::endl;

print_device_info(selected_device);

// Load kernel code

std::string kernel_code = read_file("DotProduct.cl");

// Initialize OpenCL environment

Device device(selected_device, kernel_code); // Create OpenCL device

// Allocate and initialize memory

std::vector<cl_float4> srcA(numElements);

std::vector<cl_float4> srcB(numElements);

std::vector<float> golden(numElements, 0.0f);

Memory<cl_float4> bufferA(device, numElements, 1, srcA.data()); // allocate memory on both host and device

Memory<cl_float4> bufferB(device, numElements, 1, srcB.data()); // allocate memory on both host and device

Memory<float> bufferC(device, numElements, 1); // allocate memory on both host and device

// Query the maximum workgroup size supported by the device

size_t max_workgroup_size;

selected_device.cl_device.getInfo(CL_DEVICE_MAX_WORK_GROUP_SIZE, &max_workgroup_size);

std::cout << "Max Workgroup Size for this device: " << max_workgroup_size << std::endl;

// Set the workgroup size to the minimum of the maximum allowed or 64

size_t workgroup_size = std::min(max_workgroup_size, (size_t)64);

// Load kernel source from file

Kernel dot_product_kernel(device, numElements, workgroup_size, "DotProduct", bufferA, bufferB, bufferC, numElements);

// Initialize input vectors

for (int i = 0; i < numElements; ++i) {

srcA[i] = {static_cast<float>(i % 200), static_cast<float>(i % 200), static_cast<float>(i % 200), static_cast<float>(i % 200)};

srcB[i] = {static_cast<float>((2 * i) % 300), static_cast<float>((2 * i) % 300), static_cast<float>((2 * i) % 300), static_cast<float>((2 * i) % 300)};

}

// Execute kernel

bufferA.write_to_device(); // copy data from host memory to device memory

bufferB.write_to_device(); // copy data from host memory to device memory

dot_product_kernel.run();

// Read results back

bufferC.read_from_device();

float* dst = bufferC(); // FIX: Use float* instead of std::vector<float>*

// Verify results

bool success = true;

int correctCount = 0; // Counter for correct results

for (int i = 0; i < numElements; ++i) {

float expected = srcA[i].s[0] * srcB[i].s[0] + srcA[i].s[1] * srcB[i].s[1] +

srcA[i].s[2] * srcB[i].s[2] + srcA[i].s[3] * srcB[i].s[3];

if (std::abs(expected - dst[i]) > 1e-5) { // FIX: Directly access dst[i]

std::cerr << "Mismatch at index " << i << ": " << expected << " != " << dst[i] << std::endl;

success = false;

} else if (correctCount < 5) { // Print only the first 5 correct values

std::cout << "Correct at index " << i << ": " << expected << " == " << dst[i] << std::endl;

correctCount++;

}

}

if (success) {

std::cout << "Success: Kernel executed correctly!" << std::endl;

} else {

std::cerr << "Verification failed!" << std::endl;

}

} catch (const std::runtime_error &e) { // FIX: Added curly brackets around catch block

std::cerr << "OpenCL error: " << e.what() << std::endl;

return EXIT_FAILURE;

} catch (const std::exception &e) {

std::cerr << "Error: " << e.what() << std::endl;

return EXIT_FAILURE;

}

return EXIT_SUCCESS;

}This host code:

- Identifies and lists all available OpenCL devices

- Selects the most powerful device based on FLOPS

- Loads the OpenCL kernel from file

- Sets up memory buffers for input and output data

- Determines the optimal workgroup size for the selected device

- Initializes the input vectors with test data

- Executes the kernel on the device

- Retrieves and verifies the results

Building, Simulating, and Running the Application #

With the Brane SDK, building applications for the Kalray MPPA is straightforward:

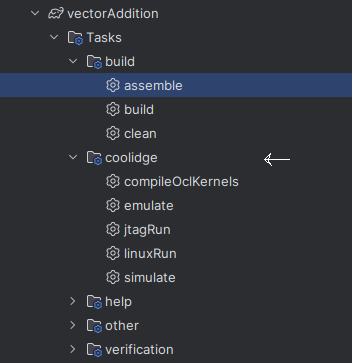

Building from IntelliJ IDEA #

- Open the project in IntelliJ IDEA

- Navigate to the Gradle panel

- Expand Tasks > build

- Double-click on “assemble”

Simulating on a x86_64 Host System #

- Open the project in IntelliJ IDEA

- Navigate to the Gradle panel

- Expand Tasks > coolidge

- Double-click on “emulate” (faster) or “simulate” (more accurate)

Expected Output #

> Task :DevToolkit:tutorial:accelerators:coolidge:basics:openCL:dotProduct:emulate

[Simulation] Running host application in QEMU simulation mode...

[Simulation] Copying /home/nicolas/Tools/brane-builder/brane-builder-plugin/DevToolkit/tutorial/accelerators/coolidge/basics/openCL/dotProduct/src/main/opencl/DotProduct.cl to /home/nicolas/Tools/brane-builder/brane-builder-plugin/DevToolkit/tutorial/accelerators/coolidge/basics/openCL/dotProduct/build/emulator/qemu/DotProduct.cl

Listing all available OpenCL devices:

|----------------.------------------------------------------------------------|

| Device ID 0 | MPPA Coolidge |

|----------------'------------------------------------------------------------|

|----------------.------------------------------------------------------------|

| Device ID | 0 |

| Device Name | MPPA Coolidge |

| Device Vendor | KALRAY Corporation |

| Device Driver | MPPA OpenCL Driver 1.0 (Linux) |

| OpenCL Version | OpenCL C 1.2 |

| Compute Units | 5 at 1100 MHz (5 cores, 0.176 TFLOPs/s) |

| Memory, Cache | 3584 MB VRAM, 16 KB global / 6400 KB local |

| Buffer Limits | 1792 MB global, 64 KB constant |

|----------------'------------------------------------------------------------|

Selected device based on the highest FLOPS:

|----------------.------------------------------------------------------------|

| Device ID | 0 |

| Device Name | MPPA Coolidge |

| Device Vendor | KALRAY Corporation |

| Device Driver | MPPA OpenCL Driver 1.0 (Linux) |

| OpenCL Version | OpenCL C 1.2 |

| Compute Units | 5 at 1100 MHz (5 cores, 0.176 TFLOPs/s) |

| Memory, Cache | 3584 MB VRAM, 16 KB global / 6400 KB local |

| Buffer Limits | 1792 MB global, 64 KB constant |

|----------------'------------------------------------------------------------|

|----------------.------------------------------------------------------------|

| Device ID | 0 |

| Device Name | MPPA Coolidge |

| Device Vendor | KALRAY Corporation |

| Device Driver | MPPA OpenCL Driver 1.0 (Linux) |

| OpenCL Version | OpenCL C 1.2 |

| Compute Units | 5 at 1100 MHz (5 cores, 0.176 TFLOPs/s) |

| Memory, Cache | 3584 MB VRAM, 16 KB global / 6400 KB local |

| Buffer Limits | 1792 MB global, 64 KB constant |

|----------------'------------------------------------------------------------|

| Info: OpenCL C code successfully compiled. |

Max Workgroup Size for this device: 16

Correct at index 0: 0 == 0

Correct at index 1: 8 == 8

Correct at index 2: 32 == 32

Correct at index 3: 72 == 72

Correct at index 4: 128 == 128

Success: Kernel executed correctly!

4. Running the Application #

- Open the project in IntelliJ IDEA

- Navigate to the Gradle panel

- Expand Tasks > coolidge

- Double-click on “linuxRun” or “jtagRun”

5. Expected Output #

When running on a system with a Kalray MPPA Coolidge processor, you should see output similar to:

Listing all available OpenCL devices:

Platform 0: Kalray OpenCL

Device 0: Coolidge

- Type: ACCELERATOR

- Vendor: Kalray

- Max Compute Units: 16

- Max Work Group Size: 256

- Global Memory: 2048 MB

- Max FLOPS: 1200 GFLOPS

Selected device based on the highest FLOPS:

Platform 0: Kalray OpenCL

Device 0: Coolidge

...

Max Workgroup Size for this device: 16

Correct at index 0: 0 == 0

Correct at index 1: 8 == 8

Correct at index 2: 32 == 32

Correct at index 3: 72 == 72

Correct at index 4: 128 == 128

Success: Kernel executed correctly!Code and Implementation Explained #

Let’s dive deeper into how this application works and understand the implementation details for Kalray MPPA:

OpenCL Kernel Analysis #

The dot product kernel is optimized for MPPA’s SIMD capabilities:

__kernel void DotProduct (__global float* a, __global float* b, __global float* c, int iNumElements)

{

int iGID = get_global_id(0);

if (iGID >= iNumElements) {

return;

}

int iInOffset = iGID << 2;

c[iGID] = a[iInOffset] * b[iInOffset] +

a[iInOffset + 1] * b[iInOffset + 1] +

a[iInOffset + 2] * b[iInOffset + 2] +

a[iInOffset + 3] * b[iInOffset + 3];

}Key optimization techniques used in this kernel:

- Vectorized Processing: Each work item processes four elements at once, which maps well to MPPA’s SIMD capabilities. The expression

iGID << 2is equivalent toiGID * 4, but using the bit-shift operator is often more efficient for power-of-two multiplications. - Boundary Checking: The kernel includes a check to ensure work items don’t process elements beyond the array bounds, which is essential for handling data sizes that aren’t multiples of the vectorization factor.

- Memory Access Pattern: The kernel uses a strided access pattern that processes consecutive elements within each work item, promoting efficient memory access on the MPPA architecture.

- Work Distribution: Each work item computes a single output value that represents the dot product of a 4-element segment, distributing the workload evenly across available processing elements.

Host Application Flow #

The host application follows a standard OpenCL workflow with MPPA-specific considerations:

1. Device Discovery and Selection:

std::vector<Device_Info> devices = get_devices(); Device_Info selected_device = select_device_with_most_flops(devices); The application automatically identifies available OpenCL devices and selects the most powerful one based on FLOPS. This ensures optimal performance on heterogeneous systems.

2. Kernel Loading and Compilation:

std::string kernel_code = read_file("DotProduct.cl"); Device device(selected_device, kernel_code); The kernel code is loaded from a file and compiled for the target device. This approach allows for runtime flexibility and adaptation to different MPPA configurations.

3. Memory Allocation and Data Initialization:

std::vector<cl_float4> srcA(numElements);std::vector<cl_float4> srcB(numElements); Memory<cl_float4> bufferA(device, numElements, 1, srcA.data()); Memory<cl_float4> bufferB(device, numElements, 1, srcB.data()); Memory<float> bufferC(device, numElements, 1); The application creates host-side vectors and device memory buffers. Note that input data uses cl_float4 vectors to match the kernel’s vectorized processing approach, while the output uses single float values.

4. Workgroup Configuration:

size_t max_workgroup_size; selected_device.cl_device.getInfo(CL_DEVICE_MAX_WORK_GROUP_SIZE, &max_workgroup_size); size_t workgroup_size = std::min(max_workgroup_size, (size_t)64); The application queries the device for its maximum supported workgroup size and selects an appropriate value. For MPPA devices, this is typically aligned with the number of processing elements in a cluster (usually 16).

5. Kernel Execution:

bufferA.write_to_device(); bufferB.write_to_device(); dot_product_kernel.run(); bufferC.read_from_device(); The application transfers input data to the device, executes the kernel, and retrieves the results. This explicit data movement is crucial for MPPA performance optimization.

6. Result Verification:

for (int i = 0; i < numElements; ++i) { float expected = srcA[i].s[0] * srcB[i].s[0] + srcA[i].s[1] * srcB[i].s[1] + srcA[i].s[2] * srcB[i].s[2] + srcA[i].s[3] * srcB[i].s[3]; // Compare with actual result } The application verifies the kernel results by comparing them with a host-side computation, ensuring correctness.

Memory Layout and Data Organization #

The way we organize data in memory can significantly impact performance when working with the Kalray MPPA. Let’s explore how our application structures data for optimal processing:

How Input Data is Structured #

In our application, we don’t just store individual floating-point numbers – we group them into sets of four. This approach matches how modern processors, including the Kalray MPPA, prefer to handle data.

// Creating vectors of 4-float elements

std::vector<cl_float4> srcA(numElements);

std::vector<cl_float4> srcB(numElements);

// Each cl_float4 contains four float values that can be accessed as:

// srcA[i].s[0], srcA[i].s[1], srcA[i].s[2], and srcA[i].s[3]Think of this like shopping for eggs – instead of buying individual eggs, we buy them in cartons of four. This makes both carrying them (memory transfer) and using them (processing) more efficient.

When we initialize these vectors, we fill each group of four with related values:

// Setting all four values in each vector element

for (int i = 0; i < numElements; ++i) {

srcA[i] = {static_cast<float>(i % 200), static_cast<float>(i % 200),

static_cast<float>(i % 200), static_cast<float>(i % 200)};

}How Memory is Accessed #

Our kernel is designed to take advantage of this grouped structure. Each work item (think of it as a worker in our parallel processing team) processes four consecutive values at once:

// The iInOffset calculation points to the start of a group of four elements

int iInOffset = iGID << 2; // Same as iGID * 4

// Then we process all four elements together

c[iGID] = a[iInOffset] * b[iInOffset] +

a[iInOffset + 1] * b[iInOffset + 1] +

a[iInOffset + 2] * b[iInOffset + 2] +

a[iInOffset + 3] * b[iInOffset + 3];This approach has several benefits:

- The processor can load four values in a single memory operation

- Values that will be processed together are stored together

- The MPPA’s vector processing units can work efficiently on groups of data

How Output Data is Structured #

The output of our dot product operation is simpler – it’s just an array of individual float values:

// Output is a single float per calculation

Memory<float> bufferC(device, numElements, 1);Each output value represents the dot product result for a group of four elements from each input vector. This makes sense because a dot product ultimately produces a single value from corresponding pairs of elements.

This organization of our data – grouping input values, processing them together, and producing individual results – allows us to make the best use of the MPPA’s capabilities for high-performance computing.

Understanding Workgroup Sizes #

Workgroups are fundamental to how parallel processing happens on the MPPA. Think of workgroups like teams of workers tackling different parts of a problem simultaneously.

Matching Teams to the Hardware #

The Kalray MPPA processor is built with clusters of processing elements. For optimal performance, we want to align our workgroups with this physical structure:

// This annotation requests a specific workgroup size

#ifdef ENABLE_WG_SIZE

__attribute__((reqd_work_group_size(16, 1, 1)))

#endifThe size of 16 is not arbitrary – it matches the number of processing elements typically found in a Coolidge MPPA cluster. This is similar to how you might organize 16 workers into teams that match the number of available workstations.

Adapting to Different Hardware #

Our application is designed to work on various devices, not just one specific MPPA configuration. To ensure compatibility, we query the device for its capabilities and adjust accordingly:

// Ask the device what workgroup size it supports

size_t max_workgroup_size;

selected_device.cl_device.getInfo(CL_DEVICE_MAX_WORK_GROUP_SIZE, &max_workgroup_size);

// Choose the smaller of what the device supports or our preferred maximum

size_t workgroup_size = std::min(max_workgroup_size, (size_t)64);

// Now use this workgroup size when setting up the kernel

Kernel dot_product_kernel(device, numElements, workgroup_size, "DotProduct",

bufferA, bufferB, bufferC, numElements);This approach is like having flexible team sizes based on the available workspace – we adapt to make the best use of whatever resources are available.

How Work is Distributed #

Understanding how work gets divided among teams is crucial for performance:

- Our total problem (processing all vector elements) is divided into workgroups

- Each workgroup runs on a single cluster within the MPPA

- Within a workgroup, individual work items process their assigned elements

The ideal balance varies based on your specific problem and MPPA configuration. Generally:

- Too few workgroups might leave some clusters idle

- Too many tiny workgroups can increase scheduling overhead

- Uneven workgroup sizes might cause some teams to finish early and wait

Finding the right balance through experimentation can significantly boost performance for your specific application.

Understanding Key MPPA Concepts #

When developing for the Kalray MPPA architecture, it’s important to understand several key concepts:

1. Cluster-Based Architecture #

The Kalray MPPA Coolidge processor is organized into compute clusters, each containing:

- Multiple processing elements (PEs)

- Shared local memory

- DMA engines for data transfer

- Network interfaces for inter-cluster communication

This distributed architecture allows for highly parallel execution but requires careful consideration of data placement and movement.

2. OpenCL Execution Model on MPPA #

OpenCL on the MPPA platform has some implementation-specific characteristics:

- Work-items within a workgroup are executed on the same cluster

- Local memory maps to the fast cluster-local memory

- Global memory access may involve NoC (Network-on-Chip) traversal

- Memory transfers between global and local memory can be optimized using DMA

3. Optimizing for Kalray MPPA #

Here are some optimization strategies specific to the Kalray MPPA architecture:

- Use Vectorization: The Kalray MPPA supports SIMD operations, which our kernel leverages by processing four elements per work-item.

- Optimize Workgroup Size: The workgroup size should be chosen based on:

- The number of processing elements in a cluster

- Memory access patterns

- Register usage

- Leverage Local Memory: Use local memory (cluster-shared memory) for frequently accessed data to reduce NoC traffic.

- Minimize Global Memory Access: Global memory access involves the NoC and has higher latency than local memory access.

Debugging Kalray MPPA Applications #

The Brane SDK integrates with Kalray’s debugging tools for MPPA applications:

Using Tracepoints #

Tracepoints, defined in the host_tps.h file, can be inserted at critical points in your code:

#include "host_tps.h"

int main(int argc, char* argv[]) {

// Emit a tracepoint at the start of main

MPPA_TRACEPOINT(host, main_ENTER, 0);

// Application code here

// Emit a tracepoint at the end of main

MPPA_TRACEPOINT(host, main_EXIT, 0);

return 0;

}These tracepoints can be collected and analyzed to understand program flow and timing.

Memory Analysis #

Memory access patterns are critical for MPPA performance. The Brane SDK includes tools to analyze memory access patterns and identify potential bottlenecks:

- Identify excessive global memory access

- Detect uncoalesced memory operations

- Optimize data transfers between clusters

Performance Profiling #

To profile Kalray MPPA application performance:

Enable profiling when running the application:

KVXOPENCLPROFILING=1 ./build/exe/main/debug/dotProductThis environment variable activates detailed performance metrics collection that can help identify bottlenecks.

Optimizing for Kalray MPPA #

To get the best performance from the Kalray MPPA architecture, consider these optimization strategies:

Optimize Workgroup Size #

The optimal workgroup size depends on:

- The number of processing elements in a cluster

- Memory access patterns

- Register usage

For the Kalray MPPA, workgroup sizes that are multiples of the number of processing elements per cluster (typically 16) often perform best.

Leverage Vectorization #

The Kalray MPPA supports SIMD operations. Our kernel leverages this by processing four elements per work-item:

c[iGID] = a[iInOffset] * b[iInOffset] +

a[iInOffset + 1] * b[iInOffset + 1] +

a[iInOffset + 2] * b[iInOffset + 2] +

a[iInOffset + 3] * b[iInOffset + 3];This vectorized approach can significantly improve computational throughput.

Minimize Global Memory Access #

Global memory access on the MPPA involves the NoC and has higher latency than local memory access. Where possible:

- Use local memory for frequently accessed data

- Structure algorithms to maximize data reuse

- Consider explicit data prefetching for predictable access patterns

Balance Computation and Communication #

For complex applications, balance the computational workload with communication overhead. The cluster-based architecture of the MPPA works best when:

- Data is processed locally within clusters when possible

- Inter-cluster communication is minimized and well-structured

- Workloads are evenly distributed across clusters

Common Issues and Solutions #

| Issue | Possible Cause | Solution |

| Kernel fails to compile | Unsupported OpenCL features | Review Kalray OpenCL documentation for supported features |

| Poor performance | Inefficient memory access patterns | Optimize data layout and access patterns for the MPPA architecture |

| Workgroup size errors | Exceeding device limits | Query the device for maximum workgroup size and stay within limits |

| Incorrect results | Boundary condition issues | Ensure proper boundary checking in kernels |

| Program crashes | Missing dependencies | Verify all required libraries are installed and properly linked |

Next Steps #

To further develop your Kalray MPPA programming skills with the Brane SDK:

- Explore more complex algorithms that leverage the MPPA architecture

- Implement multi-kernel pipelines for data processing applications

- Utilize Kalray-specific features for deterministic computing

- Combine MPPA with other accelerators in heterogeneous applications

The next sections in this documentation provide more detailed information on advanced MPPA programming techniques and integration with other components of the Brane SDK ecosystem.